Unveiling Neurophysiological Gender Disparities in Bodily Emotion Processing

- 1. Department of Military Medical Psychology, Fourth Military Medical University, 710032, Xi’an, China

- 2. Mental Health Education and Consultation Center, Tarim University, Alaer, 443300, China

- 3. Military Psychology Section, Logistics University of PAP, Tianjin, 300309, China

- 4. Military Mental Health Services & Research Center, Tianjin, 300309, China

- 5. College of Education Science, Changji University, Changji, Changji 831100, China

- 6. Weinan Vocational and Technical College student office, Weinan 714026, China

- #. These authors contributed equally to the study

Abstract

Background: This study aimed to explore the neural mechanisms underlying gender differences in the process of recognizing emotional expressions conveyed through body language. Using Electroencephalogram (EEG) recordings, we investigated the impact of gender on Event-Related Potentials (ERP) to unveil gender disparities in bodily emotion recognition.

Methods: A total of 34 participants, including 18 males and 16 females, were enrolled in the study. The experiment followed a 2×2 mixed design, with independent variables of gender (male and female) and bodily emotion (happy and sad). Behavioral and EEG data were simultaneously collected.

Results: Gender effects were observed at the VPP stage, and late-stage emotional processing at the LPC stage. Males exhibited more stable patterns of brain activity when identifying various bodily emotions, whereas females displayed more complex and tightly interconnected brain activity networks, particularly when recognizing negative emotions such as sadness. Gender-based differences in the significance of brain regions were also identified, with males showing greater importance in central brain areas and females exhibiting a higher level of significance in the parietal lobe.

Conclusion: Gender disparities influence bodily emotion recognition to a certain extent, although these differences are not absolute. It is essential to emphasize individual variances and the impact of cultural backgrounds. This study contributes to early detection and intervention in understanding the role of gender in cognitive development, thus alleviating the adverse effects on the psychological well-being of both genders in social interactions and interpersonal communication.

Keywords

• Physical emotions

• ERP

• VPP

• LPC

CITATION

Feng T, Wang B, Ren L, Wu L, Li D, et al. (2024) Unveiling Neurophysiological Gender Disparities in Bodily Emotion Processing. JSM Brain Sci 5(1): 1022.

ABBREVIATIONS

EEG: Electroencephalogram; ERP: Event-Related Potentials

BACKGROUND

Accurate recognition of emotional information from facial expressions, body language, vocal cues, and other environmental clues is a fundamental aspect of adaptive social interaction [1]. Emotion recognition plays a crucial role in an individual’s social interaction and emotional expression. It involves the sensitive perception and interpretation of emotional signals from others, such as facial expressions, vocal tones, and body language. It serves as the foundation for successful social interactions in everyday life and has significant applications in various clinical and psychosocial domains [2,3]. Some studies suggest that adults recognize emotional states from bodily postures about as accurately as from facial expressions, with no significant difference in accuracy [4]. Zongbao L et al. [5] used facial and bodily expression videos depicting anger, fear, and joy with different valences as experimental stimuli and conducted multivariate pattern classification analysis of functional connectivity patterns in participants under various emotional conditions using fMRI technology. The results revealed that individuals exhibit distinct neural network representations for facial and bodily emotion stimuli of the same valence, with facial expressions achieving higher accuracy in emotional classification. However, in certain circumstances, bodily cues may convey emotional valence more effectively than facial cues [6]. This could be attributed to the heightened emotion intensity under peak emotional states, making it easier to differentiate between bodily emotions of different valences compared to facial emotions. Furthermore, compared to facial expressions, body language has the advantages of a larger distinguishable area and recognizability at a greater distance [7,8]. Moreover, in the absence of facial information, individuals can accurately recognize emotions by observing brief presentations of dynamic and static bodily stimuli [9]. In summary, these studies provide robust evidence that humans are capable of extracting emotional information from specific bodily postural cues.

In everyday life, people often need to integrate and process both bodily and facial cues, and research indicates that there is mutual influence between the processing of bodily and facial emotions. Zhang D et al [10] conducted a study using a task involving four basic emotions to investigate the impact of facial emotion asynchrony on the recognition of bodily emotions. The results revealed that the perception of facial expressions significantly affects the accuracy and response time of bodily emotion classification. When the emotional categories of body posture and facial expressions are inconsistent, the accuracy of facial emotion classification is significantly reduced, with participants tending to align their judgments of facial expressions with the emotions conveyed by the body [11-15]. Although the aforementioned studies suggest that the addition of bodily information to facial expressions results in a less than 10% improvement in recognition accuracy, Aviezer et al study [16] demonstrates that bodily cues are indispensable for the recognition of intense facial expressions in everyday scenarios, such as exuberance after a successful match or profound sorrow after a defeat. When experimental materials consist solely of facial cues, participants are unable to distinguish between valences.

Neuroscientific research has already indicated that the processing of bodily emotional information involves complex neural networks, including visual areas, cortical and subcortical regions associated with emotion processing (such as the amygdala, anterior insula, and orbitofrontal cortex), as well as the prefrontal and cerebellar sensorimotor regions [12,17,18]. These areas, while akin to those activated during facial emotion processing, exhibit unique patterns of activation. Furthermore, during the early stages of processing bodily emotional information, researchers have observed a phenomenon known as motor inhibition. When individuals process joyful and fearful body postures, as opposed to neutral ones, their motor excitability, as measured by Motor-Evoked Potentials (MEPs) induced through Transcranial Magnetic Stimulation (TMS), decreases. This motor inhibition can be explained by a directed/freezing mechanism in which the cortical brain areas inhibit motor excitability to facilitate monitoring socially relevant signals related to survival [19-22]. However, this motor inhibition phenomenon does not manifest when individuals process emotional facial stimuli [23,24].

There is a compelling need for a more in-depth analysis and exploration of the temporal and spatial processes of bodily emotion processing. While existing Event-Related Potentials studies have shown that emotional body postures and faces can elicit similar ERP components, indicating that individuals rapidly allocate cognitive resources to monitor bodily emotion-related signals, there has been no research analyzing the dimensional information between temporal indicators during the processing of bodily emotional information. A more granular exploration of the relationships between behavioral and electroencephalogram indicator variables during the processing of emotional body information holds significant importance for understanding the specificity of an individual’s processing of bodily emotions in contrast to other types of emotional stimuli. While some studies have covered the general impact of gender in emotion recognition, there is a scarcity of research that concentrates on bodily emotion recognition, with even fewer examining the interplay between ERPs and related variables. This constitutes a significant knowledge gap.

Hence, it is imperative to further investigate the neural mechanisms underlying gender differences in emotion processing, specifically using bodily emotions as stimuli. This study employs a bodily emotion category classification task in conjunction with ERP technology to examine differences in the processing mechanisms of different bodily emotions, aiming to validate whether different genders exhibit processing advantages for distinct bodily emotions. The experimental materials utilize the Luo Yuejia China Physical Emotion Material Library (CEPS) [25]. Selected ERP components, including VPP and LPC related to bodily emotion processing, serve as research indicators. Additionally, the study conducts further analyses of temporal features in brainwave data for different genders and valence conditions.

The objective of this study is to explore the impact of gender differences on ERPs in bodily emotion recognition, providing additional clues for elucidating the neural basis of gender differences. By employing high temporal-resolution EEG in conjunction with network analysis techniques, the research investigates whether there are gender-based differences in ERPs during bodily emotion recognition tasks and whether these differences reflect unique neural mechanisms related to gender in emotion processing.

METHODS

Participants and ethical statement

This study received approval from the Ethics Committee of the First Affiliated Hospital of the Fourth Military Medical University under the reference number KY20234195-1. Thirty-six undergraduate and postgraduate students participated, including 18 males; ages ranged from 18 to 25 years, with a mean age of 23.65 years. Two participants were excluded from the study due to excessive artifacts. All participants were right-handed, had normal or corrected-to-normal vision, and had no known cognitive or neurological disorders. Prior to the experiment, all participants provided written informed consent, in accordance with the principles outlined in the Declaration of Helsinki.

Material

We employed a 2 × 2 mixed factorial design in this experiment. The between-participants factor was gender (male vs. female), and the within-participants factor was the emotional expression conveyed by the body (happiness vs. sadness). The stimuli comprised 120 images sourced from the Chinese Library of Physical Emotional Materials [25], with valence and intensity ratings conducted using a 9-point Likert scale [26]. Among these stimuli, there were 18 images depicting positive physical emotions and 52 images depicting negative physical emotions. One hundred participants were selected to rate the type and intensity of these emotional body pictures, with an equal male-to-female ratio (1:1) and an average age of 22.8 years. The happy and sad stimuli were matched on intensity [mean (M) ± Standard Deviation (SD): happy = 4.89 ± 0.72; sad = 3.73 ± 0.74]. On the display screen, each image occupied a visual angle of 4.93° (horizontally) × 5.99° (vertically), viewed from a distance of 65 cm.
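The reported visual angles can be converted into physical on-screen sizes with the standard relation s = 2d·tan(θ/2). The sketch below is only an illustration of that arithmetic; the function name is hypothetical, and the only values taken from the text are the two angles and the 65 cm viewing distance.

```python
import math


def size_from_visual_angle(angle_deg: float, distance_cm: float) -> float:
    """Return the on-screen extent (cm) that subtends angle_deg at distance_cm."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


VIEWING_DISTANCE_CM = 65.0  # viewing distance reported in the text

# Horizontal and vertical visual angles reported in the text
width_cm = size_from_visual_angle(4.93, VIEWING_DISTANCE_CM)
height_cm = size_from_visual_angle(5.99, VIEWING_DISTANCE_CM)

print(f"{width_cm:.2f} cm x {height_cm:.2f} cm")
```

Under these values, each image would measure roughly 5.6 cm by 6.8 cm on the screen.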

Procedure

All participants provided their informed consent by signing a consent form prior to participating in the experiment. Before the experiment began, participants were informed that the computer screen would display a series of stimuli, comprising both negative and positive body emotional pictures. They were instructed to respond promptly to these stimuli. E-Prime 3.0 software was utilized to randomly present pictures depicting negative and positive body emotions. The procedure commenced with the presentation of instructions. Once the participant was prepared to begin, a practice block of 20 trials was conducted, followed by the formal experiment of 120 trials, divided into two parts. Each trial began with a fixation point (+) displayed at the bottom center of the screen for 500 ms, followed by a blank screen of random duration (300-500 ms). Subsequently, a stimulus picture was presented for 1000 ms, during which the participant was required to judge whether the bodily emotion depicted was negative or positive. These responses corresponded to the keys F and J, respectively. The picture disappeared upon keypress, followed by an inter-stimulus interval lasting 800-1000 ms. The formal experiment was divided into two blocks, separated by a 5-minute break, with the formal recording of the Event-Related Potentials (ERPs) taking approximately 15 minutes. The assignment of response keys to the left and right hands was counterbalanced across participants.
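The trial structure described above can be summarized as a simple timing schedule. The following sketch is not the E-Prime script used in the study; it only restates the reported durations. The function names, the fixed random seed, and the assumption that jittered intervals are drawn uniformly in whole milliseconds are all illustrative.

```python
import random


def build_trial(rng: random.Random) -> dict:
    """Return the durations (ms) of the four phases of one trial."""
    return {
        "fixation_ms": 500,                  # fixation point (+)
        "blank_ms": rng.randint(300, 500),   # jittered blank screen (assumed uniform)
        "stimulus_max_ms": 1000,             # picture ends earlier on keypress
        "interval_ms": rng.randint(800, 1000),  # jittered interval (assumed uniform)
    }


def build_session(n_trials: int = 120, n_blocks: int = 2, seed: int = 0) -> list:
    """Split the formal trials evenly into blocks and draw timings for each trial."""
    rng = random.Random(seed)
    per_block = n_trials // n_blocks
    return [[build_trial(rng) for _ in range(per_block)] for _ in range(n_blocks)]


blocks = build_session()
# Longest possible trial: every phase at its maximum duration
longest_ms = max(sum(trial.values()) for block in blocks for trial in block)
print(len(blocks), len(blocks[0]), longest_ms)
```

With every phase at its maximum, a single trial lasts at most 3000 ms, so 120 trials fit comfortably within a recording of several minutes.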

EEG recording and data analysis

Raw EEG data underwent offline preprocessing using EEGLAB [27] in Neuroscan. Data were re-referenced to the average of the two mastoids, (M1 + M2)/2. Continuous EEG data were band-pass filtered between 1 and 30 Hz and segmented into epochs with a time window of 1000 ms, spanning from 200 ms pre-stimulus to 800 ms post-stimulus. Baseline correction was applied using the pre-stimulus interval (-200 to 0 ms). Trials affected by eye blinks and movements were corrected using an independent component analysis algorithm.
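Three of the steps above (mastoid re-referencing, epoching from -200 to 800 ms, and baseline correction) reduce to simple arithmetic, sketched below in plain Python. This is not the EEGLAB pipeline used in the study: the band-pass filter and the independent component analysis are omitted, the function names are hypothetical, and the 500 Hz sampling rate and the synthetic signal are assumptions, since the text does not report a sampling rate.

```python
def rereference_to_linked_mastoids(channel, m1, m2):
    """Subtract the mastoid average (M1 + M2) / 2 from a channel, sample by sample."""
    return [x - (a + b) / 2.0 for x, a, b in zip(channel, m1, m2)]


def extract_epoch(signal, onset_idx, sfreq, tmin_s=-0.2, tmax_s=0.8):
    """Cut one epoch from tmin_s to tmax_s (seconds) around a stimulus onset sample."""
    start = onset_idx + round(tmin_s * sfreq)
    stop = onset_idx + round(tmax_s * sfreq)
    if start < 0 or stop > len(signal):
        raise ValueError("epoch extends beyond the recording")
    return signal[start:stop]


def baseline_correct(epoch, sfreq, tmin_s=-0.2):
    """Subtract the mean of the pre-stimulus interval (tmin_s to 0 s) from the epoch."""
    n_baseline = round(-tmin_s * sfreq)
    baseline = sum(epoch[:n_baseline]) / n_baseline
    return [x - baseline for x in epoch]


SFREQ_HZ = 500  # assumed sampling rate, not reported in the text
ONSET = 1000    # stimulus onset sample in the synthetic recording

# Synthetic data: flat channels plus a 4 µV deflection about 150 ms after onset
cz = [5.0] * 2000
m1 = [1.0] * 2000
m2 = [3.0] * 2000
for i in range(ONSET + 75, ONSET + 85):
    cz[i] += 4.0

reref = rereference_to_linked_mastoids(cz, m1, m2)
epoch = extract_epoch(reref, ONSET, SFREQ_HZ)
corrected = baseline_correct(epoch, SFREQ_HZ)
print(len(corrected), max(corrected))
```

After correction the pre-stimulus interval averages zero, so the remaining deflection reflects only the post-stimulus change.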

The analysis focused on cue-elicited responses over the frontocentral and parietal electrodes (Fz, Cz, Pz), indexing the VPP and LPC components (time windows: 150–170 and 450–600 ms, respectively).
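As an illustration of how the two time windows translate into component measures, the sketch below computes a mean amplitude and a peak latency within a window of a baseline-corrected epoch. The text does not state whether mean or peak amplitudes were analyzed, so both measures, the function names, the 500 Hz sampling rate, and the synthetic epoch are assumptions made for the example.

```python
VPP_WINDOW_MS = (150, 170)  # VPP window from the text
LPC_WINDOW_MS = (450, 600)  # LPC window from the text
EPOCH_TMIN_MS = -200        # epoch starts 200 ms before stimulus onset


def window_indices(window_ms, sfreq):
    """Convert a (start, end) window in ms into sample indices within the epoch."""
    first = round((window_ms[0] - EPOCH_TMIN_MS) * sfreq / 1000)
    last = round((window_ms[1] - EPOCH_TMIN_MS) * sfreq / 1000)
    return first, last


def mean_amplitude(epoch, window_ms, sfreq):
    """Average voltage inside the window."""
    first, last = window_indices(window_ms, sfreq)
    segment = epoch[first:last]
    return sum(segment) / len(segment)


def peak_latency_ms(epoch, window_ms, sfreq):
    """Latency (ms after onset) of the most positive sample inside the window."""
    first, last = window_indices(window_ms, sfreq)
    segment = epoch[first:last]
    peak = max(range(len(segment)), key=segment.__getitem__)
    return (first + peak) * 1000 / sfreq + EPOCH_TMIN_MS


SFREQ_HZ = 500  # assumed sampling rate, not reported in the text

# Synthetic epoch: a single 2 µV sample at 160 ms and a 3 µV plateau from 450 to 600 ms
epoch = [0.0] * 500
epoch[180] = 2.0
for i in range(325, 400):
    epoch[i] = 3.0

vpp_mean = mean_amplitude(epoch, VPP_WINDOW_MS, SFREQ_HZ)
vpp_latency = peak_latency_ms(epoch, VPP_WINDOW_MS, SFREQ_HZ)
lpc_mean = mean_amplitude(epoch, LPC_WINDOW_MS, SFREQ_HZ)
print(vpp_mean, vpp_latency, lpc_mean)
```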

For all ANOVAs, the significance level was set at alpha = 0.05, and ANOVAs were supplemented by either Bonferroni pairwise or simple main effects comparisons where appropriate. Greenhouse-Geisser correction was applied for all effects with two or more degrees of freedom in the numerator. Please note that all repeated measures ANOVA results are reported with uncorrected degrees of freedom, but with corrected p values.
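The Bonferroni adjustment mentioned above multiplies each p value by the number of comparisons and caps the result at 1. The sketch below shows this for three pairwise comparisons, as would arise among three electrode sites; the p values are invented for illustration and are not results from this study.

```python
ALPHA = 0.05  # significance level stated in the text


def bonferroni_adjust(p_values):
    """Return Bonferroni-adjusted p values: p * number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical uncorrected p values for three pairwise comparisons
raw_p = [0.004, 0.020, 0.300]
adjusted_p = bonferroni_adjust(raw_p)
significant = [p < ALPHA for p in adjusted_p]
print(adjusted_p, significant)
```

In this invented example, only the first comparison remains significant after correction, although the second was below 0.05 before it.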

RESULTS

Descriptive statistics

The means and standard deviations of accuracy (ACC) and response time (RT) are presented in Table 1.

Table 1: Descriptive statistics of ACC and RT (N = 36)

| | ACC, Female | ACC, Male | RT (ms), Female | RT (ms), Male | ACC, Happy | ACC, Sad | RT (ms), Happy | RT (ms), Sad |
|---|---|---|---|---|---|---|---|---|
| Mean | 0.96 | 0.94 | 593.88 | 573.19 | 0.97 | 0.96 | 589.89 | 581.54 |
| SD | 0.19 | 0.24 | 109.92 | 100.74 | 0.18 | 0.24 | 106.12 | 107.47 |

Note: Trials with incorrect responses (3.1%) and trials with response times faster than 100 ms or slower than 1,000 ms, or that deviated more than 2.5 SD from the cell mean (3.27%), were excluded from further analysis.
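The exclusion rule in the note can be sketched as follows for the trials of one design cell. The order of the steps (absolute cutoffs first, then a single pass of the 2.5 SD criterion using the sample standard deviation) is an assumption, as the note does not specify it; the function name and the response times are invented for illustration.

```python
import statistics


def keep_trials(rts_ms, correct, fast_ms=100, slow_ms=1000, sd_cutoff=2.5):
    """Return the RTs of one cell that survive the exclusion criteria."""
    # Step 1: drop incorrect responses and RTs outside the absolute limits
    valid = [rt for rt, ok in zip(rts_ms, correct) if ok and fast_ms <= rt <= slow_ms]
    if len(valid) < 2:
        return valid
    # Step 2: drop RTs more than sd_cutoff sample SDs from the cell mean (single pass)
    mean = statistics.mean(valid)
    sd = statistics.stdev(valid)
    return [rt for rt in valid if abs(rt - mean) <= sd_cutoff * sd]


# Invented RTs: one too fast, one too slow, one incorrect, one statistical outlier
rts = [550, 560, 570, 580, 590, 600, 610, 620, 630, 640, 950, 50, 1200, 600]
correct = [True] * 13 + [False]

kept = keep_trials(rts, correct)
print(kept)
```

In this invented cell, the 50 ms and 1200 ms trials fail the absolute limits, the last trial is dropped as incorrect, and the 950 ms trial falls outside 2.5 SD of the remaining mean.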

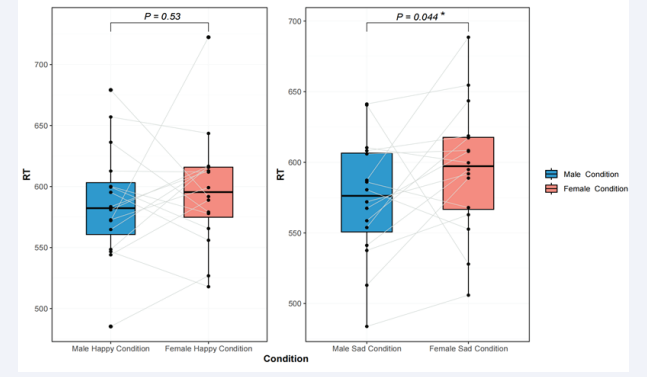

We conducted repeated measures ANOVAs on RT and ACC, with body emotion (happiness or sadness) as the within-participant factor and gender (male or female) as the between-participant factor. The analysis revealed a main effect of body emotion [F(1, 34) = 5.37, p < 0.05, ηp2 = 0.52], with higher ACC in the body happy condition (M = 96.7 %) than in the body sad condition (M = 92.4 %). Additionally, there was a main effect of gender [F(1, 34) = 3.69, p < 0.05, ηp2 = 0.66]. Bonferroni-corrected pairwise comparisons revealed higher ACC for females (M = 95.8 %) than for males (M = 93.2 %; p < 0.05). The interaction was not significant, F(1, 34) < 1. For RT, the gender difference in the sad condition was significant (p < 0.05), with males responding faster than females.

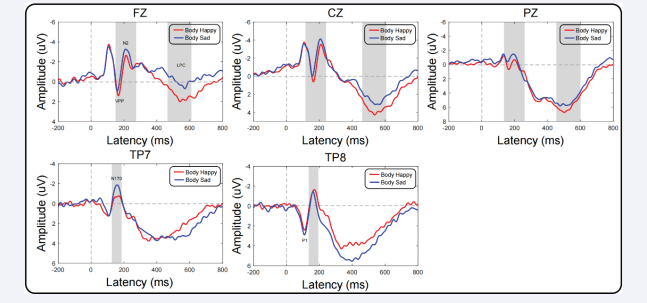

Time domain analyses

Figure 1 presents time-locked ERP responses to target onset recorded from central electrodes, revealing the VPP and LPC components. The conditions are also depicted in Figure 1. In the two emotional priming conditions, target-related ERPs exhibit a positive component spanning from 150 ms to 170 ms (VPP) over the frontocentral and parietal regions. This is followed by a positive component from 450 to 600 ms (LPC).

Figure 1: Boxplot chart of RT for males and females under different conditions.

VPP component: A significant main effect of gender was observed for VPP amplitude [F(1,34) = 3.67, p < 0.05, ηp2 = 0.05]. Female participants (1.22 ± 0.15 μV) exhibited a more positive VPP component than male participants (0.36 ± 0.08 μV). In terms of VPP latency, there was a main effect of body emotion type [F(1,34) = 7.80, p < 0.001, ηp2 = 0.03]. Body sad stimuli (160.16 ± 1.89 ms) elicited an earlier VPP than body happy stimuli (162.67 ± 1.69 ms). Furthermore, an interaction between gender and body emotion was observed [F(1,34) = 4.07, p < 0.05, ηp2 = 0.02]. Simple effects analysis revealed significant differences in the body sad condition [F(1,34) = 4.98, p < 0.05], with further analysis indicating that VPP latency to body sad stimuli was significantly shorter in females (157.67 ± 1.67 ms) than in males (162.67 ± 2.10 ms); see Figure S1 (Supplementary Material).

LPC component: The main effect of brain region on LPC amplitude was significant [F(1,34) = 73.08, p < 0.001, ηp2 = 0.26]. Post hoc analysis revealed that LPC amplitude differed among brain regions, with Pz (5.95 ± 0.19 μV) showing the largest amplitude, followed by Cz (3.74 ± 0.05 μV) and Fz (1.14 ± 0.07 μV). An interaction between body emotion and brain region was also observed [F(1,34) = 5.86, p < 0.001, ηp2 = 0.04]. Simple effects analysis revealed significant differences across brain regions in both conditions. In the body happy condition, Pz (6.07 ± 0.21 μV) showed the highest amplitude, followed by Cz (3.54 ± 0.13 μV) and Fz (1.59 ± 0.07 μV). In the body sad condition, Pz (5.83 ± 0.12 μV) exhibited the highest amplitude, followed by Cz (3.32 ± 0.11 μV) and Fz (0.68 ± 0.09 μV).

For all ANOVAs, the significance level was set at alpha = 0.05, and ANOVAs were supplemented with either Bonferroni pairwise comparisons or simple main effects analyses where appropriate. Greenhouse-Geisser correction was applied to all effects with two or more degrees of freedom in the numerator. It is important to note that all results of the repeated measures ANOVA are reported with uncorrected degrees of freedom but with corrected p-values (Figure 2).

Figure 2: Male and female target-related ERP waveforms in Body Happy and Body Sad conditions recorded at Tp7, Tp8, Fz, Cz, and Pz. In the left and right brain regions and midline, voltage scalp maps of all peaks in each condition are shown.

DISCUSSION

This study’s results indicate that gender significantly influences the ERP in bodily emotion recognition. Behavioral outcomes reveal that individuals exhibit a higher accuracy in recognizing positive body expressions compared to negative conditions, contrary to the negative bias theory supported by prior research [28]. This contrast could be attributed to the tendency in previous studies to employ negative conditions (such as fear) in contrast to neutral expressions as stimulus materials [29,30]. Past research has also shown that recognizing negative emotions is often more challenging than positive ones. Even in studies focused on recognizing positive emotions, a sole emphasis on behavioral data may lead to a ceiling effect, making it difficult to discern group differences [31,32], which aligns with the behavioral results of this study. Furthermore, regardless of the stimulus type, females exhibited a higher accuracy in recognizing bodily expressions compared to males, indicating that females possess an advantage in processing bodily emotional information. These findings support the ‘attachment facilitation’ theory, which suggests that females, in comparison to males, have processing advantages for emotions across all categories. This advantage is believed to be an adaptation for females to react more swiftly and accurately to infants’ emotional needs as caregivers, thereby promoting the establishment of secure attachment relationships [33].

For the VPP component, we observed a significant gender effect. Regardless of stimulus valence, females elicited more positive VPP amplitudes compared to males. Additionally, under negative conditions, the latency of VPP in females was shorter than in males, indicating that females can recognize bodily expressions more rapidly and sensitively. This processing advantage is particularly pronounced when processing negative information. Prior research has shown that gender differences in processing negative emotions are more pronounced and stable compared to positive emotions [34]. Some researchers argue that the processing advantage females exhibit for negative emotional stimuli is the primary reason for their higher susceptibility to emotional disorders compared to males [35].

The emotional processing advantage in females can be explained from socio-cultural and evolutionary perspectives. Historically, women have been responsible for caregiving, particularly during the pre-linguistic stages of development, necessitating the ability to recognize emotional expressions and potential threats to their offspring. Consequently, women are more adept at identifying various emotions, place greater importance on discerning the psychological states of others, facilitating communication, enhancing emotional bonding, and protecting their social group. This results in heightened sensitivity and accuracy when recognizing and assessing emotional cues in others [36]. From a physiological perspective, females have a larger orbitofrontal cortex volume, which is closely associated with emotional experience, cognitive evaluation, and control processes. They also have a larger proportion of gray matter within unit volume, related to cognitive processes such as speech and emotional processing.

Differences exist between males and females in the recognition of bodily emotions. Specifically, males tend to emphasize the speed and direction of movements when recognizing body language, while females focus more on subtle changes in facial expressions and body postures. These differences may be related to the lateralization differences in information processing in the male and female brain. It’s important to note that these differences should not be viewed as absolute, as individual variances and cultural disparities may also influence the ability to recognize bodily emotions. Furthermore, these distinctions do not imply that one gender is superior to the other in the recognition of bodily emotions. On the contrary, both abilities are crucial and play different roles in various contexts.

In recent years, numerous studies have explored gender differences in the recognition of bodily emotions. These studies employ a range of methods, including behavioral assessments, neuroimaging, and neuroelectrophysiology. Through network analysis, this study has identified distinct differences in bodily emotion recognition between males and females. Both genders can accurately recognize most emotions, and this recognition ability is not significantly different between sexes. Males exhibit more robust brain activity patterns when identifying various bodily emotions, while females demonstrate a more intricate and densely connected network structure, particularly in the recognition of negative emotions like sadness. These findings highlight that females have more complex and tightly interconnected processes when identifying sadness. In terms of brain regions, males exhibit greater importance in the central area, whereas females show higher significance in the parietal region. Beyond gender factors, individual differences and cultural variations can also influence the ability to recognize bodily emotions. For instance, some individuals may be more sensitive to specific emotions, while various cultures have different norms and expectations regarding emotional expressions.

In summary, there are some differences between males and females in the recognition of bodily emotions. The network analysis provides a more granular look at their inherent interrelationships. However, these differences are not absolute and should not be exaggerated. Instead, we should emphasize individual variances and cultural backgrounds to gain a more comprehensive understanding of the nature of bodily emotion recognition abilities.

CONCLUSION

This study employed network analysis to investigate the interaction of brain activity during limb emotion recognition between males and females. Our temporal domain findings revealed gender effects at the VPP stage and late-stage emotional processing at the LPC stage. Network analysis results underscored that females exhibit more intricate and tighter connections in recognizing sad emotions, whereas males have relatively stable network structures across different emotions. The most critical core indicators for males were found in the central brain area, while females exhibited them in the parietal lobe. These findings may contribute to the early detection and intervention of gender-specific roles in cognitive development, alleviating the adverse effects on the psychological well-being of both genders in social interactions and interpersonal communication.

DECLARATIONS

Acknowledgement

We would like to thank all the individuals who participated in the study. We also thank all the administrative staff and teachers in the university who helped us with the recruitment.

Authors’ contributions

T.F., B.W. and D.L. proposed the facility idea and scheme of this project. T.F., B.W., D.L., M.M., L.R., L.W. and Y.W. conducted the research and collected the raw data. T.F. and L.R. analyzed the data. T.F. and B.W. drafted the manuscript. H.W. and X.L. revised the manuscript and took responsibility for the integrity of the data and the accuracy of the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

Air Force equipment comprehensive research key project (KJ2022A000415), Major military logistics research projects (AKJWS221J001).

Availability of data and materials

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Ethics approval and consent to participate

This study was conducted in accordance with the ethical standards put forth in the Declaration of Helsinki. The study design and procedures were reviewed and approved by the Independent Ethics Committee of the First Affiliated Hospital of the Fourth Military Medical University (No. KY20234195-1).

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

REFERENCES

- Rosenberg N, Ihme K, Lichev V, Sacher J, Rufer M, Grabe HJ, et al. Alexithymia and automatic processing of facial emotions: behavioral and neural findings. BMC Neurosci. 2020; 21: 23.

- Atkinson AP, Dittrich WH, Gemmell AJ, Young AW. Emotion perception from dynamic and static body expressions in point-light and full-light displays. Perception. 2004; 33: 717-746.

- Atkinson AP, Tunstall ML, Dittrich WH. Evidence for distinct contributions of form and motion information to the recognition of emotions from body gestures. Cognition. 2007; 104: 59-72.

- Zieber N, Kangas A, Hock A, Bhatt RS. Infants’ perception of emotion from body movements. Child Dev. 2014; 85: 675-684.

- Zongbao L, Yun Y, Guangzhen Z, Yuankui Y, Wenming Z, Ruixin C. The recognition of bodily expression: An event-related potential study. Studies of Psychology and Behavior. 2019; 17: 318-325.

- Aviezer H, Trope Y, Todorov A. Body cues, not facial expressions, discriminate between intense positive and negative emotions. Science. 2012; 338: 1225-1229.

- de Gelder B. Why bodies? Twelve reasons for including bodily expressions in affective neuroscience. Philos Trans R Soc Lond B Biol Sci. 2009; 364: 3475-3484.

- de Gelder B, Van den Stock J, Meeren HK, Sinke CB, Kret ME, Tamietto M. Standing up for the body. Recent progress in uncovering the networks involved in the perception of bodies and bodily expressions. Neurosci Biobehav Rev. 2010; 34: 513-527.

- Irvin KM, Bell DJ, Steinley D, Bartholow BD. The thrill of victory: Savoring positive affect, psychophysiological reward processing, and symptoms of depression. Emotion. 2022; 22: 1281-1293.

- Dandan Z, Ting Z, Yunzhe L, Yuming C. Comparison of facial expressions and body expressions: An event-related potential study. Acta Psychologica Sinica. 2015; 47: 963-970.

- Willis ML, Palermo R, Burke D. Judging approachability on the face of it: the influence of face and body expressions on the perception of approachability. Emotion. 2011; 11: 514-523.

- Meeren HK, van Heijnsbergen CC, de Gelder B. Rapid perceptual integration of facial expression and emotional body language. Proc Natl Acad Sci U S A. 2005; 102: 16518-16523.

- Aviezer H, Bentin S, Dudarev V, Hassin RR. The automaticity of emotional face-context integration. Emotion. 2011; 11: 1406-1414.

- Aviezer H, Hassin RR, Ryan J, Grady C, Susskind J, Anderson A, et al. Angry, disgusted, or afraid? Studies on the malleability of emotion perception. Psychol Sci. 2008; 19: 724-732.

- Gu Y, Mai X, Luo YJ. Do bodily expressions compete with facial expressions? Time course of integration of emotional signals from the face and the body. PLoS One. 2013; 8: e66762.

- Aviezer H, Messinger DS, Zangvil S, Mattson WI, Gangi DN, Todorov A. Thrill of victory or agony of defeat? Perceivers fail to utilize information in facial movements. Emotion. 2015; 15: 791-797.

- Ferrari C, Ciricugno A, Urgesi C, Cattaneo Z. Cerebellar contribution to emotional body language perception: A TMS study. Soc Cogn Affect Neurosci. 2019; 17: 81-90.

- Tamietto M, de Gelder B. Neural bases of the non-conscious perception of emotional signals. Nat Rev Neurosci. 2010; 11: 697-709.

- Fanselow MS. Neural organization of the defensive behavior system responsible for fear. Psychon Bull Rev. 1994; 1: 429-438.

- Frijda NH. Impulsive action and motivation. Biol Psychol. 2010; 84: 570-579.

- Gainotti G, Keenan JP. Editorial: Emotional lateralization and psychopathology. Front Psychiatry. 2023; 14: 1231283.

- Hagenaars MA, Oitzl M, Roelofs K. Updating freeze: Aligning animal and human research. Neurosci Biobehav Rev. 2014; 47: 165-176.

- De Schutter E. Fallacies of Mice Experiments. Neuroinformatics. 2019; 17: 181-183.

- Borgomaneri S, Vitale F, Avenanti A. Early motor reactivity to observed human body postures is affected by body expression, not gender. Neuropsychologia. 2020; 146: 107541.

- Yuejia L, Tingting W, Ruoli G. Research progress on brain mechanism of emotion and cognition. Journal of the Chinese Academy of Sciences. 2012; 27: 31-41.

- Luo W, Feng W, He W, Wang NY, Luo YJ. Three stages of facial expression processing: ERP study with rapid serial visual presentation. Neuroimage. 2010; 49: 1857-1867.

- Kalburgi SN, Kleinert T, Aryan D, Nash K, Schiller B, Koenig T. MICROSTATELAB: The EEGLAB toolbox for resting-state microstate analysis. Brain Topogr. 2024; 37: 621-645.

- McRae K, Gross JJ. Emotion regulation. Emotion. 2020; 20: 1-9.

- Van den Stock J, Vandenbulcke M, Sinke CB, de Gelder B. Affective scenes influence fear perception of individual body expressions. Hum Brain Mapp. 2014; 35: 492-502.

- Hagenaars MA, Oitzl M, Roelofs K. Updating freeze: Aligning animal and human research. Neurosci Biobehav Rev. 2014; 47: 165-176.

- Shriver LH, Dollar JM, Calkins SD, Keane SP, Shanahan L, Wideman L. Emotional eating in adolescence: Effects of emotion regulation, weight status and negative body image. Nutrients. 2020; 13: 79.

- Walenda A, Bogusz K, Kopera M, Jakubczyk A, Wojnar M, Kucharska K. Emotion regulation in binge eating disorder. Psychiatr Pol. 2021; 55: 1433-1448.

- Addabbo M, Turati C. Binding actions and emotions in the infant’s brain. Soc Neurosci. 2020; 15: 470-476.

- Lambrecht L, Kreifelts B, Wildgruber D. Gender differences in emotion recognition: Impact of sensory modality and emotional category. Cogn Emot. 2014; 28: 452-469.

- Sorinas J, Ferrández JM, Fernandez E. Brain and body emotional responses: Multimodal approximation for valence classification. Sensors. 2020; 20: 313.

- Poláčková Šolcová I, Lačev A. Differences in male and female subjective experience and physiological reactions to emotional stimuli. Int J Psychophysiol. 2017; 117: 75-82.